Word similarity using product discount data

Of all the branches of machine learning, the one I'm most interested in is unsupervised learning: discovering structure in the data without labels or a specific target in mind. It is already being used as a way to "bootstrap" other models, first extracting features from the data and then using a supervised model to assign labels from those features using training examples.

Some months ago, while reading about different models, I came across Word2vec. Word2vec is a model that lets you learn features from text. It was created by Tomas Mikolov and his colleagues at Google and is detailed in the paper Distributed Representations of Words and Phrases and their Compositionality. Some people say it's semi-supervised because it uses a target function to learn the context of every word in the corpus. It's based on a shallow neural network (1 hidden layer), which is really fast to train and gives you an M x N matrix (M: vocabulary size, N: number of features), with one row vector per word. By studying the distances between the vectors that make up this matrix we can find which words are used in similar contexts.
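To make the "distance between vectors" idea concrete, here is a minimal sketch using cosine similarity, which is a common choice for word embeddings. The `most_similar` helper, the four-word vocabulary and the matrix values are all made up for illustration; they are not taken from the trained model.

```python
import numpy as np

def most_similar(word, vocab, embeddings, top_k=3):
    """Return the top_k words closest to `word` by cosine similarity."""
    index = {w: i for i, w in enumerate(vocab)}
    # Normalise each row to unit length so a dot product equals cosine similarity.
    norms = np.linalg.norm(embeddings, axis=1, keepdims=True)
    unit = embeddings / np.clip(norms, 1e-12, None)
    scores = unit @ unit[index[word]]
    # Sort by descending similarity and skip the query word itself.
    order = np.argsort(-scores)
    ranked = [(vocab[i], float(scores[i])) for i in order if vocab[i] != word]
    return ranked[:top_k]

# Toy 4 x 3 matrix standing in for the real M x N embedding (values are invented).
vocab = ["red", "blue", "laptop", "tablet"]
embeddings = np.array([
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.1],
    [0.0, 0.9, 0.4],
    [0.1, 0.8, 0.5],
])
neighbours = most_similar("red", vocab, embeddings, top_k=2)
```

With these toy values "blue" ranks first for "red", because their rows point in almost the same direction.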

I decided to give it a try with a dataset from Oony, a deal-aggregator service I created with Cristian Hentschel. We collected data from more than 150M coupons and discounted products published around the world. As the dataset is quite large, I used only a subset: deals from the UK in the electronics & technology category.

Some characteristics of the dataset:

- 1132643 records.

- I used only the title and description fields.

- Title average length is 61 chars.

- Description average length is 94 chars.

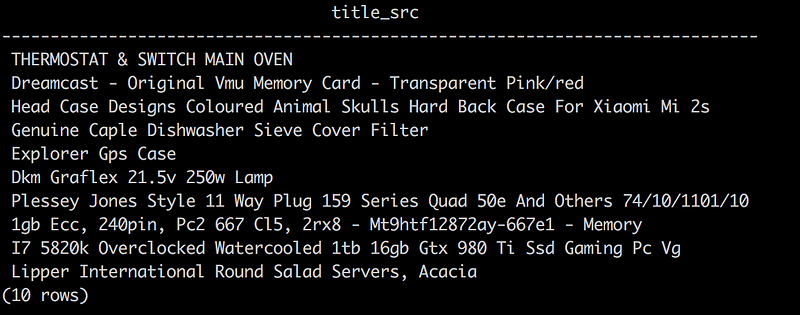

Example data

Questions:

- Is there any structure in the data?

- Will it work well with short titles and descriptions from deals?

Getting the word embedding

- Ran a Python script to extract a text dump of title+description from a PostgreSQL database where all the deals are stored.

- Stripped HTML tags, converted everything to lowercase and removed stopwords using NLTK. Applied the WordNet lemmatizer to reduce the vocabulary size.

- Saved a 136MB file to disk with a concatenation of all the processed text.

- I used TensorFlow with a modified version of the word2vec_optimized script, setting the window_size to 2 and the embedding size to 200. (I also tried gensim but found the TensorFlow version faster.)

- The vocabulary size was 131718 words, and I ran the training for 15 epochs.
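The steps above can be sketched in plain Python. This is a simplified illustration, not the script I actually ran: the tiny `STOPWORDS` set stands in for NLTK's stopword list, lemmatization is left out, and `skipgram_pairs` is a hypothetical helper that only shows what a window size of 2 means (the TensorFlow script builds its training examples internally).

```python
import re
from html import unescape

# Tiny stand-in stopword list; the real run used NLTK's English stopwords.
STOPWORDS = {"a", "an", "the", "and", "with", "for", "in", "of", "on", "to"}

TAG_RE = re.compile(r"<[^>]+>")
TOKEN_RE = re.compile(r"[a-z0-9]+")

def preprocess(text):
    """Strip HTML tags, lowercase, tokenise and drop stopwords."""
    text = unescape(TAG_RE.sub(" ", text)).lower()
    return [t for t in TOKEN_RE.findall(text) if t not in STOPWORDS]

def skipgram_pairs(tokens, window_size=2):
    """Yield (center, context) pairs for words within window_size positions."""
    for i, center in enumerate(tokens):
        start = max(0, i - window_size)
        stop = min(len(tokens), i + window_size + 1)
        for j in range(start, stop):
            if j != i:
                yield center, tokens[j]

tokens = preprocess("<b>Samsung</b> Galaxy Tab with 16GB and a free case")
pairs = list(skipgram_pairs(tokens, window_size=2))
```

Note that removing stopwords before pairing means a window of 2 can reach words that were further apart in the original title.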

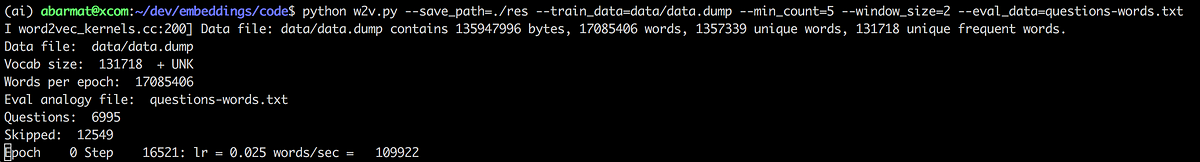

Word2vec using Tensorflow

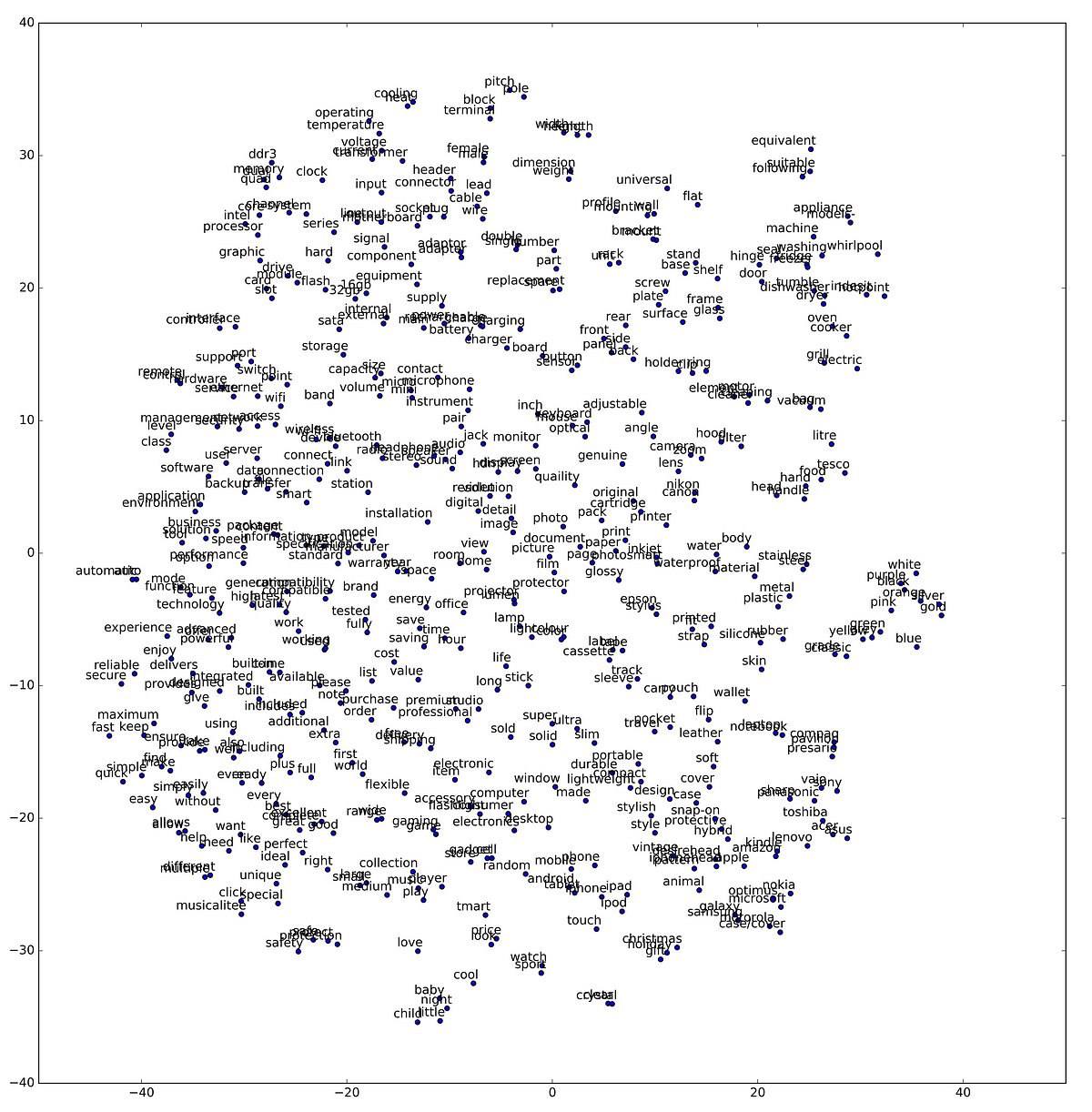

Visualization

As a result of processing the dataset through Word2vec I got a 131718 x 200 matrix. As it's high-dimensional data and impossible to visualize directly, I applied a t-SNE transformation to reduce the matrix and project it onto a plane. I selected only the top 500 words by frequency from the vocabulary and ran the transformation with a perplexity of 30 and 5000 iterations (I tried different values to see what looked good). There's a good guide on interpreting t-SNE and its hyper-parameters in the "interesting resources" section below.
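A rough sketch of those two steps follows. It assumes scikit-learn's `TSNE` for the projection, which is not necessarily the implementation I used, and the helper names `top_k_rows` and `project_2d` are hypothetical. The toy vocabulary and matrix at the bottom are invented just to show the selection step.

```python
from collections import Counter
import inspect

import numpy as np

def top_k_rows(tokens, vocab, embeddings, k=500):
    """Pick the embedding rows of the k most frequent in-vocabulary words."""
    index = {w: i for i, w in enumerate(vocab)}
    counts = Counter(t for t in tokens if t in index)
    words = [w for w, _ in counts.most_common(k)]
    return words, embeddings[[index[w] for w in words]]

def project_2d(vectors, perplexity=30, iterations=5000, seed=0):
    """Project vectors onto a plane with scikit-learn's t-SNE (assumed library)."""
    from sklearn.manifold import TSNE  # imported lazily; only needed for plotting
    # The iteration argument is named max_iter in recent releases, n_iter in older ones.
    params = inspect.signature(TSNE.__init__).parameters
    iter_arg = "max_iter" if "max_iter" in params else "n_iter"
    tsne = TSNE(n_components=2, perplexity=perplexity, random_state=seed,
                **{iter_arg: iterations})
    return tsne.fit_transform(vectors)

# Toy data: 4 words with 2 features each, plus a short token stream.
vocab = ["case", "usb", "cable", "hdmi"]
embeddings = np.arange(8, dtype=float).reshape(4, 2)
tokens = ["usb", "cable", "usb", "hdmi", "usb", "cable", "unknown"]
words, rows = top_k_rows(tokens, vocab, embeddings, k=2)
```

On the real matrix the call would look like `project_2d(rows)` with the 500 selected rows; t-SNE needs more samples than the perplexity value, so it is not run on the toy data here.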

Conclusions

From the chart we can see clumps of words which share similar contexts, for instance colors on the middle right side, computer parts on the top left, and electronics brands on the lower right. There's a bunch of selling/marketing words (perfect, unique, ideal, special, best, great, excellent) on the lower left.

Some applications of the embedding info I can think of are: using it to improve search relevance for related terms, creating relationships between different deals, and identifying entities which can help tag different attributes of the deals and products.
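As an illustration of the second idea, one simple (and untested on this dataset) way to relate deals is to average the word vectors of each title and compare the averages with cosine similarity. Everything below is an assumption for the sake of the example: the `deal_vector` and `cosine` helpers, the vocabulary and the vector values.

```python
import numpy as np

def deal_vector(tokens, index, embeddings):
    """Average the vectors of the tokens that exist in the vocabulary."""
    rows = [embeddings[index[t]] for t in tokens if t in index]
    if not rows:
        # No known words: fall back to a zero vector of the right size.
        return np.zeros(embeddings.shape[1])
    return np.mean(rows, axis=0)

def cosine(a, b):
    """Cosine similarity, returning 0.0 when either vector is all zeros."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom else 0.0

# Invented 2-feature embedding for four words.
vocab = ["laptop", "notebook", "charger", "red"]
index = {w: i for i, w in enumerate(vocab)}
embeddings = np.array([
    [0.9, 0.1],
    [0.8, 0.2],
    [0.5, 0.5],
    [0.0, 1.0],
])

deal_a = deal_vector(["laptop", "charger"], index, embeddings)
deal_b = deal_vector(["notebook", "charger"], index, embeddings)
deal_c = deal_vector(["red"], index, embeddings)
sim_ab = cosine(deal_a, deal_b)
sim_ac = cosine(deal_a, deal_c)
```

Averaging ignores word order and weights every word equally, so it is only a starting point for short titles like these.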

Other experiments that would be nice to try are: using different embedding and window sizes, running the process without removing stopwords to see if it retains context information better, and using a much larger dataset to see what structure emerges.